She’s almost 70, spend all day watching q-anon style of videos (but in Spanish) and every day she’s anguished about something new, last week was asking us to start digging a nuclear shelter because Russia was dropped a nuclear bomb over Ukraine. Before that she was begging us to install reinforced doors because the indigenous population were about to invade the cities and kill everyone with poisonous arrows. I have access to her YouTube account and I’m trying to unsubscribe and report the videos, but the reccomended videos keep feeding her more crazy shit.

At this point I would set up a new account for her - I’ve found Youtube’s algorithm to be very… persistent.

Unfortunately, it’s linked to the account she uses for her job.

You can make “brand accounts” on YouTube that are a completely different profile from the default account. She probably won’t notice if you make one and switch her to it.

You’ll probably want to spend some time using it for yourself secretly to curate the kind of non-radical content she’ll want to see, and also set an identical profile picture on it so she doesn’t notice. I would spend at least a week “breaking it in.”

But once you’ve done that, you can probably switch to the brand account without logging her out of her Google account.

I love how we now have to monitor the content the generation that told us “Don’t believe everything you see on the internet.” watches like we would for children.

We can thank all that tetraethyllead gas that was pumping lead into the air from the 20s to the 70s. Everyone got a nice healthy dose of lead while they were young. Made 'em stupid.

OP’s mom breathed nearly 20 years worth of polluted lead air straight from birth, and OP’s grandmother had been breathing it for 33 years up until OP’s mom was born. Probably not great for early development.

Shoutout to lead paint and asbestos maybe?

Asbestos is respiratory, nothing to do with brain development or damage.

Delete watch history, find and watch nice channels and her other interests, log in to the account on a spare browser on your own phone periodically to make sure there’s no repeat of what happened.

Oof that’s hard!

You may want to try the following though to clear the algorithm up

Clear her YouTube watch history: This will reset the algorithm, getting rid of a lot of the data it uses to make recommendations. You can do this by going to “History” on the left menu, then clicking on “Clear All Watch History”.

Clear her YouTube search history: This is also part of the data YouTube uses for recommendations. You can do this from the same “History” page, by clicking “Clear All Search History”.

Change her ‘Ad personalization’ settings: This is found in her Google account settings. Turning off ad personalization will limit how much YouTube’s algorithms can target her based on her data.

Introduce diverse content: Once the histories are cleared, start watching a variety of non-political, non-conspiracy content that she might enjoy, like cooking shows, travel vlogs, or nature documentaries. This will help teach the algorithm new patterns.

Dislike, not just ignore, unwanted videos: If a video that isn’t to her taste pops up, make sure to click ‘dislike’. This will tell the algorithm not to recommend similar content in the future.

Manually curate her subscriptions: Unsubscribe from the channels she’s not interested in, and find some new ones that she might like. This directly influences what content YouTube will recommend.

I’m a bit disturbed how people’s beliefs are literally shaped by an algorithm. Now I’m scared to watch Youtube because I might be inadvertently watching propaganda.

deleted by creator

It’s even worse than “a lot easier”. Ever since the advances in ML went public, with things like Midjourney and ChatGPT, I’ve realized that the ML models are way way better at doing their thing that I’ve though.

Midjourney model’s purpose is so receive text, and give out an picture. And it’s really good at that, even though the dataset wasn’t really that large. Same with ChatGPT.

Now, Meta has (EDIT: just a speculation, but I’m 95% sure they do) a model which receives all data they have about the user (which is A LOT), and returns what post to show to him and in what order, to maximize his time on Facebook. And it was trained for years on a live dataset of 3 billion people interacting daily with the site. That’s a wet dream for any ML model. Imagine what it would be capable of even if it was only as good as ChatGPT at doing it’s task - and it had uncomparably better dataset and learning opportunities.

I’m really worried for the future in this regard, because it’s only a matter of time when someone with power decides that the model should not only keep people on the platform, but also to make them vote for X. And there is nothing you can do to defend against it, other than never interacting with anything with curated content, such as Google search, YT or anything Meta - because even if you know that there’s a model trying to manipulate with you, the model knows - there’s a lot of people like that. And he’s already learning and trying how to manipulate even with people like that. After all, it has 3 billion people as test subjects.

That’s why I’m extremely focused on privacy and about my data - not that I have something to hide, but I take a really really great issue with someone using such data to train models like that.

Just to let you know, meta has an open source model, llama, and it’s basically state of the art for open source community, but it falls short of chatgpt4.

The nice thing about the llama branches (vicuna and wizardlm) is that you can run them locally with about 80% of chatgpt3.5 efficiency, so no one is tracking your searches/conversations.

My personal opinion is that it’s one of the first large cases of misalignment in ML models. I’m 90% certain that Google and other platforms have been for years already using ML models design for user history and data they have about him as an input, and what videos should they offer to him as an ouput, with the goal to maximize the time he spends watching videos (or on Facebook, etc).

And the models eventually found out that if you radicalize someone, isolate them into a conspiracy that will make him an outsider or a nutjob, and then provide a safe space and an echo-chamber on the platform, be it both facebook or youtube, the will eventually start spending most of the time there.

I think this subject was touched-upon in the Social Dillema movie, but given what is happening in the world and how it seems that the conspiracies and desinformations are getting more and more common and people more radicalized, I’m almost certain that the algorithms are to blame.

If youtube “Algorithm” is optimizing for watchtime then the most optimal solution is to make people addicted to youtube.

The most scary thing I think is to optimize the reward is not to recommend a good video but to reprogram a human to watch as much as possible

I think that making someone addicted to youtube would be harder, than simply slowly radicalizing them into a shunned echo chamber about a conspiracy theory. Because if you try to make someone addicted to youtube, they will still have an alternative in the real world, friends and families to return to.

But if you radicalize them into something that will make them seem like a nutjob, you don’t have to compete with their surroundings - the only place where they understand them is on the youtube.

100% they’re using ML, and 100% it found a strategy they didn’t anticipate

The scariest part of it, though, is their willingness to continue using it despite the obvious consequences.

I think misalignment is not only likely to happen (for an eventual AGI), but likely to be embraced by the entities deploying them because the consequences may not impact them. Misalignment is relative

Reason and critical thinking is all the more important in this day and age. It’s just no longer taught in schools. Some simple key skills like noticing fallacies or analogous reasoning, and you will find that your view on life is far more grounded and harder to shift

Just be aware that we can ALL be manipulated, the only difference is the method. Right now, most manipulation is on a large scale. This means they focus on what works best for the masses. Unfortunately, modern advances in AI mean that automating custom manipulation is getting a lot easier. That brings us back into the firing line.

I’m personally an Aspie with a scientific background. This makes me fairly immune to a lot of manipulation tactics in widespread use. My mind doesn’t react how they expect, and so it doesn’t achieve the intended result. I do know however, that my own pressure points are likely particularly vulnerable. I’ve not had the practice resisting having them pressed.

A solid grounding gives you a good reference, but no more. As individuals, it is down to us to use that reference to resist undue manipulation.

My normal YT algorithm was ok, but shorts tries to pull me to the alt-right.

I had to block many channels to get a sane shorts algorythm.“Do not recommend channel” really helps

Using Piped/Invidious/NewPipe/insert your preferred alternative frontend or patched client here (Youtube legal threats are empty, these are still operational) helps even more to show you only the content you have opted in to.

You watch this one thing out of curiosity, morbid curiosity, or by accident, and at the slightest poke the goddamned mindless algorithm starts throwing this shit at you.

The algorithm is “weaponized” for who screams the loudest, and I truly believe it started due to myopic incompetence/greed, not political malice. Which doesn’t make it any better, as people don’t know how to take care of themselves from this bombardment, but the corporations like to pretend that ~~they~~ people can, so they wash their hands for as long as they are able.

Then on top of this, the algorithm has been further weaponized by even more malicious actors who have figured out how to game the system.

That’s how toxic meatheads like infowars and joe rogan get a huge bullhorn that reaches millions. “Huh… DMT experiences… sounds interesting”, the format is entertaining… and before you know it, you are listening to anti-vax and qanon excrement, your mind starts to normalize the most outlandish things.EDIT: a word, for clarity

Whenever I end up watching something from a bad channel I always delete it from my watch history, in case that affects my front page too.

Huh, I tried that. Still got recommended incel-videos for months after watching a moron “discuss” the Captain Marvel movie. Eventually went through and clicked “dont recommend this” on anything that showed on my frontpage, that helped.

Log in as her on your device. Delete the history, turn off ad personalisation, unsubscribe and block dodgy stuff, like and subscribe healthier things, and this is the important part: keep coming back regularly to tell YouTube you don’t like any suggested videos that are down the qanon path/remove dodgy watched videos from her history.

Also, subscribe and interact with things she’ll like - cute pets, crafts, knitting, whatever she’s likely to watch more of. You can’t just block and report, you’ve gotta retrain the algorithm.

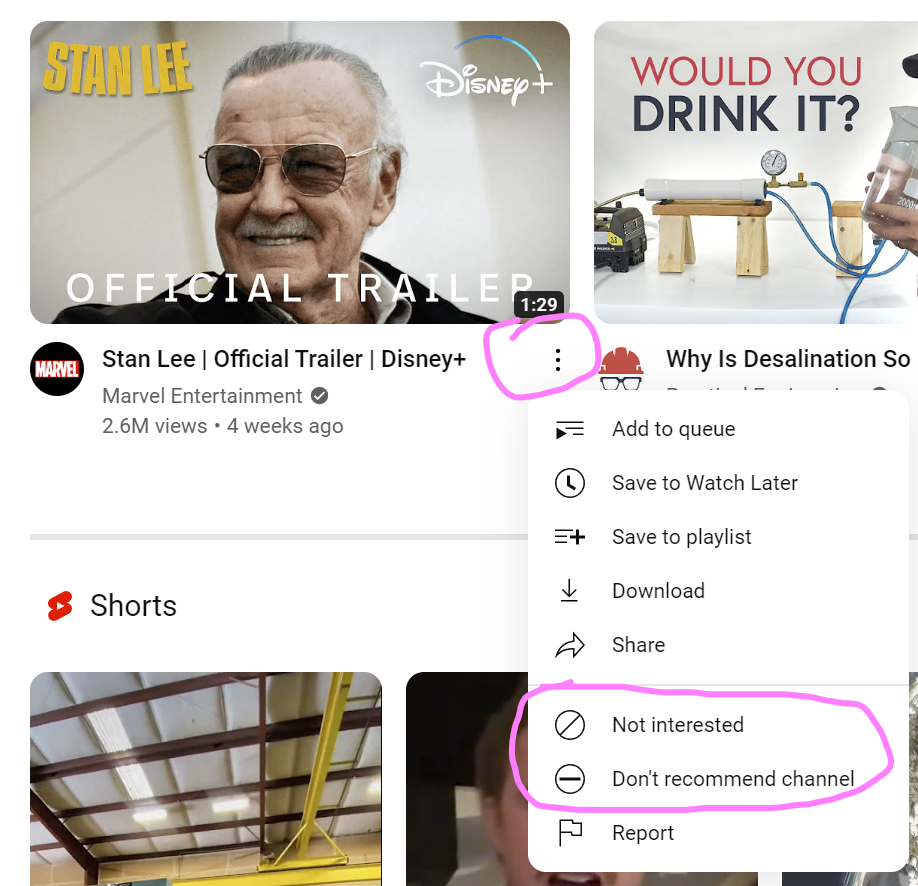

Yeah, when you go on the feed make sure to click on the 3 dots for every recommended video and “Don’t show content like this” and also “Block channel” because chances are, if they uploaded one of these stupid videos, their whole channel is full of them.

Would it help to start liking/subscribing to videos that specifically debunk those kinds of conspiracy videos? Or, at the very least, demonstrate rational concepts and critical thinking?

Probably not. This is an almost 70 year old who seems not to really think rationally in the first place. She’s easily convinced by emotional misinformation.

Probably just best to occupy her with harmless entertainment.

We recommend her a youtube channel about linguistics and she didn’t like it because the Phd in linguistics was saying that is ok for language to change. Unfortunately, it comes a time when people just want to see what already confirms their worldview, and anything that challenges that is taken as an offense.

the damage that corporate social media has inflicted on our social fabric and political discourse is beyond anything we could have imagined.

Populism and racism is as old as societies. Anciant Greece already had it. Rome fell to it. Christianism is born out of it.

Funnily enough, people always complained about how bad their society was because of this new thing. Like 5 thousand years ago already. Probably earlier even.

Which is not to say we shouldn’t do anything about it. We definitely should. But common sense won’t save us unfortunately.

This is true, but one could say the same about talk radio or television.

Talk radio or television broadcasts the same stuff to everyone. It’s damaging, absolutely. But social media literally tailors the shit to be exactly what will force someone farther down the rabbit hole. It’s actively, aggressively damaging and sends people on a downward spiral way faster while preventing them from encountering diverse viewpoints.

I agree it’s worse, but i was just thinking how there are regions where people play ONLY Fox on every public television, and if you turn on the radio it’s exclusively a right-wing propagandist ranting to tell you democrats are taking all your money to give it to black people on welfare.

Well it’s kind of true.

Actually, liberal taxes pay for rural white America.

Yes, I agree - there have always been malevolent forces at work within the media - but before facebook started algorithmically whipping up old folks for clicks, cable TV news wasn’t quite as savage. The early days of hate-talk radio was really just Limbaugh ranting into the AM ether. Now, it’s saturated. Social media isn’t the root cause of political hatred but it gave it a bullhorn and a leg up to apparent legitimacy.

Great advice in here. Now, how do I de-radicalize my mom? :(

In the google account privacy settings you can delete the watch and search history. You can also delete a service such as YouTube from the account, without deleting the account itself. This might help starting afresh.

I was so weirded out when I found out that you can hear ALL of your “hey Google” recordings in these settings.

Yeah, anything you send Google/Amazon/Facebook they will keep. I have been moving away from them. Protonmail for email, etc.

I had to log into my 84-year-old grandmother’s YouTube account and unsubscribe from a bunch of stuff, “Not interested” on a bunch of stuff, subscribed to more mainstream news sources… But it only works for a couple months.

The problem is the algorithm that values viewing time over anything else.

Watch a news clip from a real news source and then it recommends Fox News. Watch Fox News and then it recommends PragerU. Watch PragerU and then it recommends The Daily Wire. Watch that and then it recommends Steven Crowder. A couple years ago it would go even stupider than Crowder, she’d start getting those videos where it’s computer voice talking over stock footage about Hillary Clinton being arrested for being a demonic pedophile. Luckily most of those channels are banned at this point or at least the algorithm doesn’t recommend them.

I’ve thought about putting her into restricted mode, but I think that would be too obvious that I’m manipulating the strings in the background.

Then I thought she’s 84, she’s going to be dead in a few years, she doesn’t vote, does it really matter that she’s concerned about trans people trying to cut off little boy’s penises or thinks that Obama is wearing ankle monitor because he was arrested by the Trump administration or that aliens are visiting the Earth because she heard it on Joe Rogan?

I did the same thing, although putting some videos with other things will declutter the algorithm too

I too faced this dilemma. So I uninstalled every ad blocker and made it very tedious videos. It kinda helped.

Sorry to hear about you mom and good on you for trying to steer her away from the crazy.

You can retrain YT’s recommendations by going through the suggested videos, and clicking the ‘…’ menu on each bad one to show this menu:

(no slight against Stan, he’s just what popped up)

click the Don’t recommend channel or Not interested buttons. Do this as many times as you can. You might also want to try subscribing/watching a bunch of wholesome ones that your mum might be interested in (hobbies, crafts, travel, history, etc) to push the recommendations in a direction that will meet her interests.

Edit: mention subscribing to interesting, good videos, not just watching.

You might also want to try watching a bunch of wholesome ones that your mum might be interested (hobbies, crafts, travel, history, etc) in to push the recommendations in a direction that will meet her interests.

This is a very important part of the solution here. The algorithm adapts to new videos very quickly, so watching some things you know she’s into will definitely change the recommended videos pretty quickly!

OP can make sure this continues by logging into youtube on private mode/non chrome web browser/revanced YT app using their login and effectively remotely monitor the algorithm.

(A non chrome browser that you don’t use is best so that you keep your stuff seperate, otherwise google will assume your device is connected to the account which can be potentially messy in niche cases).

Here is what you can do: make a bet with her on things that she think is going to happen in a certain timeframe, tell her if she’s right, then it should be easy money. I don’t like gambling, I just find it easier to convince people they might be wrong when they have to put something at stake.

A lot of these crazy YouTube cult channels have gotten fairly insidious, because they will at first lure you in with cooking, travel, and credit card tips, then they will direct you to their affiliated Qanon style channels. You have to watch out for those too.

My sister always mocked her and starts to fight her about how she has always been wrong before and she just get defensive and has not worked so far, except for further damage their relationship.

See if you can convince her to write down her predictions in a calendar, like “by this date X will have happened”.

You can tell her that she can use that to prove to her doubters that she was right and she called it months ago, and that people should listen to her. If somehow she’s wrong, it can be a way to show that all the things that freaked her out months ago never happened and she isn’t even thinking about them anymore because she’s freaked out about the latest issue.

Going to ask my sister to do this, it can be good for both of them.

It’s not going to work.

You can’t reason someone out of a position they didn’t reason themselves into. You have to use positive emotion to counteract the negative emotion. Literally just changing the subject to something like “Remember when we went to the beach? That was fun!” Positive emotions and memories of times before the cult brainwashing can work.

The good part about it is that once she writes her predictions down (or maybe both of them do), they can maybe move on, and talk about something other than the conspiracies.

Assuming all the predictions are wrong, especially if your mom forgot how worked up she was about them months ago but completely forgot about them after that, it can be a good way to talk about how they’re manipulating her emotions.

it took a total of one hour of youtube autoplay for me to go from “here’s how to can your garden veggies at home” to “the (((globalists))) want to outbreed white people and force them into extinction”

they will at first lure you in with cooking, travel, and credit card tips

Holy crap. I had no idea. We’ve heard of a slippery slope, but this is a slippery sheer vertical cliff.

Like that toxic meathead rogan luring the curious in with DMT stories and the like, and this sounds like that particular spore has burst and spread.Rogan did not invent this, this is the modern version of Scientology’s free e-meter reading, and historical cults have always done similar things for recruitment. While you shouldn’t be paranoid and suspicious of everyone, but you should know that these things exists and watch out for them.

this is the modern version of Scientology’s free e-meter reading

I actually have a fun story about that. They once had a booth on my college campus so just for fun I let them hook up their e-meter to me. I was extremely dubious that this device did what it claimed, but just for fun to mess with it I tried as hard as I can to think calm and relaxing thoughts. To my amazement, the needle actually went down to the “not stressed” end, so I’ve gone from thinking that the e-meter is almost certainly bunk to thinking that it is merely very probably bunk.

That isn’t the funny part, though. The funny part was that the person administering the test got really concerned and said that the device wasn’t working properly and had me take the test again. I did so, and once again the needle went down to the “not stressed” end. The person administering the test then apologized profusely that the device was clearly not working and said that they nonetheless recommended that I take their classes to deal with the stress in my life. So the whole experience was absolutely hilarious, although at the same time incredibly sad because I strongly suspect that the people at the booth weren’t saying these things in order to deceive me but because they were genuinely true believers who were incapable of seeing the plain truth even when it stared them in the face.

it’s a skin galvanometer. It measures sweating directly based on the fact that sweat is electrically conductive, then interprets more sweat as more stress. this is a fallacy as the fact that stressed people tend to sweat does NOT imply that sweaty people tend to be stressed. this works to the advantage of scientologists because genuinely stressed people will measure high, but so will a lot of unstressed people or people who are only stressed by the fact that they suddenly find themselves in an experiment. False positives and true positives are much more common than true or false negatives, and also much more profitable for scientologists. When you successfully beat the test, the person administering it insisted you go again because it’s kinda rigged and they assumed that a second reading would come back with the needle pointing strongly toward “give us a bunch of money”.

this is the central fallacy behind lie detectors as well, as they measure skin galvanic response, heart rate, and other things that are correlated with stress but can have myriad other causes, then they assume that people are stressed when they lie, then they take a flying leap to the conclusion that anyone displaying symptoms of stress must be lying.

I think it’s sad how so many of the comments are sharing strategies about how to game the Youtube algorithm, instead of suggesting ways to avoid interacting with the algorithm at all, and learning to curate content on your own.

The algorithm doesn’t actually care that it’s promoting right-wing or crazy conspiracy content, it promotes whatever that keeps people’s eyeballs on Youtube. The fact is that this will always be the most enraging content. Using “not interested” and “block this channel” buttons doesn’t make the algorithm stop trying to advertise this content, you’re teaching it to improve its strategy to manipulate you!

The long-term strategy is to get people away from engagement algorithms. Introduce OP’s mother to a patched Youtube client that blocks ads and algorithmic feeds (Revanced has this). “Youtube with no ads!” is an easy way to convince non-technical people. Help her subscribe to safe channels and monitor what she watches.

Not everyone is willing to switch platforms that easily. You can’t always be idealistic.

That’s why I suggested Revanced with “disable recommendations” patches. It’s still Youtube and there is no new platform to learn.

Ooh, you can even set the app to open automatically to the subscription feed rather than the algo driven home. The app does probably need somebody knowledgable about using the app patcher every half-dozen months to update it though.

Delete all watched history. It will give her a whole new sets of videos. Like a new algorithms.

Where does she watch he YouTube videos? If it’s a computer and you’re a little techie, switch to Firefox then change the user agent to Nintendo Switch. I find that YouTube serve less crazy videos for Nintendo Switch.

change the user agent to Nintendo Switch

You mad genius, you

This never worked for me. BUT WHAT FIXED IT WAS LISTENING TO SCREAMO METAL. APPARENTLY THE. YOURUBE KNEW I WAS A LEFTIST then. Weird but I’ve been free ever since

In addition to everything everyone here said I want to add this; don’t underestimate the value in adding new benin topics to her feel. Does she like cooking, gardening, diy, art content? Find a playlist from a creator and let it auto play. The algorithm will pick it up and start to recommend that creator and others like it. You just need to “confuse” the algorithm so it starts to cater to different interests. I wish there was a way to block or mute entire subjects on their. We need to protect our parents from this mess.

I just want to share my sympathies on how hard it must be when she goes and listens to those assholes on YouTube and believes them but won’t accept her family’s help telling her what bullshit all that is.

I hope you get her out of that zone op.